Database and Developments

Databases represent the biggest burden for enterprise realities addressing SDLC optimisation. Database Build and Release Automation are often considered as an unsolved issue and they are confined as occasional local experiments, making difficult a broader adoption of DevOps methodologies. Trying to conceptually shrink a process for SDLC: business requirements are collected, translated into proper technical requirements, arranged by releases and split in tasks. Each release will contain changes for different application components and database objects. |

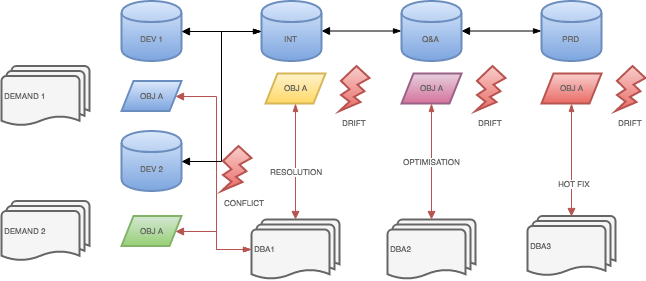

| Sample Design / Develop Process |

Data modeling

Data models are key for a software architecture and they go side by side with the data access layer. They affect the way data are organised and retrieved, thus it is necessary a careful analysis and design of data models that are often carried out through specialised tools. Those tools provide database structures for instance and may represent a common source of changes for a development tool chain.ORM and Developers

On the other side, dev teams work to consolidate data models around specific features and implement data access layers through several ORM open-source or commercial frameworks. Developers are often allowed to directly change database schemas to fit technical requirements.Demand and conflicts

Within an enterprise scenario, several demands provide business requirements. Those requirements are analysed and transformed into technical requirements that in turn may refer to conflicting resources. Such resources can be same software components or database schemas and objects.Conflicts must be solved in order to distribute coherent tasks across dev teams and maintain functioning features. Sometimes, it may happen that is required to review all way long back to business requirements in order to get rid of conflicts.

Change & Release Management

Once developments are done, it is necessary to promote changes packaged by releases. where each release package may contain several software components, database changes, etc.This process may require authorisation mechanisms, coordination among different releases, orchestration, validation of changes. Promotion of releases is a complex task since it involves different teams and responsibilities about the outcome of a deployment.

While software release automation has advanced tools able to distribute and deploy software, databases suffer because of their intrinsic nature.

|

| Sample Change & Release Process |

DBA and releases

Database application changes are traditionally managed by DBAs. A DBA is usually in charge of auditing SQL performances, authorisation grants, quality of a deployment, etc. In addition, DBAs will be in charge of database infrastructure, services, high availability, disaster recovery, parametrisation, optimisation, etc. Basically, DBAs cover a central role and they manage almost everything through manual operations.This is a big issue within an Enterprise DevOps solution since manual operations become the bottleneck for the whole lean-production.

DDL vs DML

A software development life cycle brings together data structures and software data layer components packaged by releases in order to provide higher level services. Usually relevant database design and implementation changes are related to data structure since it may affect existing data and future implementations. So, DDL is the most important part for changes, because it is going to affect basic functionalities possibly including related DML scripts.Database Lifecycle Automation

Enterprise DevOps needs automation to improve the overall process. Mainly, DBAs have a huge responsibility that is not compatible with goals a large companies want to achieve.

Essentially, DevOps is asking to fix a couple of weaknesses of the traditional process that we are going to discuss from here on.

DBA role conflicts

A DBA is in charge of database infrastructure and operations from one side and application lifecyle management on the other side. This definitely leads to a conflict and the same role would be more efficient if he/she could split responsibilities.

|

| Database Lifecycle Issues without Release Automation |

There exist many risks associated to this conflict, to mention a fews:

- SQL ownership: a DBA doesn't know about specific technical requirements and he/she is often devoted to query optimisation, authorisation grants, etc. This means that being responsible for an intermediate or final deployment whose semantic is unknown may carry to misunderstandings that can easily be translated into mistakes;

- Security: DBAs must manually review SQL scripts in order to check whether additional grants have been introduced that are not allowed. This is very important because DBAs are going to deploy through DBA grants and a wrong check may bring unwanted permissions.

- Quality: Manual deployments are obviously error-prone and DevOps tries to minimise manual operations, this is going to rapidly degrade software quality.

- Volume: Manual checks and deployments necessarily slow down the entire Software Lifecycle because Data Access Layer components and DDLs must go together. This meaning that only low volumes of changes can be managed per day.

- Standardisation: automatism means standardisation first. The more a process is standardised, the fastest your company will improve quality.

- Costs: any leak of time and capacity is a cost. It is unacceptable a manual process for a regular deployment.

Build & Release Automation

Database Lifecycle Management can be introduced through DevOps methodologies by means of standardisation and automation.

An enterprise DevOps tool able to account for database build & release automation should have at least the following abilities:

|

| Database Lifecycle Management with Release Automation |

- Source Control: definition of roles and duties, ability to control access to database resources, audit and trace any operation on databases. RBAC should be your favorite choice when selecting a tool for database build & release automation.

- Compliance: standardisation means being compliant with quality standards of a certain company plus any other measure necessary to keep a system safe. For instance, checking whether SQL scripts inject unwanted GRANTS, validating whether some storage clauses are included or not, environment-based enforced scheduling for deployments (i.e. nightly, on sunday), etc.

- Schema (and Data) Versioning: ability to store and keep trace of schema changes. This is a crucial point that makes the difference between a trivial mechanism and an enterprise one. A schema represents the current status of database objects for a certain application. Versioning scripts to keep in mind a schema is dangerous from a database perspective and cannot guarantee the same result. This happens for several reasons: sorting out scripts in different ways may result in a different schema, so order matters; even using SVN, Git or other control version systems to keep trace of changes, there is no chance to rebuild and validate the same sequence of scripts without a cumbersome orchestration. Schemas are what we want to really trace and store. That's why we should rely on DevOps tools able to versioning database schemas. Data may be useful whenever data represent configurations at application level.

- Script Building (Reliable Promote/Rollback): ability to build a script that reproduces all necessary changes between two schemas. That is, if you have two different versions of the same schema, you can create a script to bring all changes from the original version to another one. Think about schema version 1.5 in DEV and schema version 1.0 in INTEGRATION. Since relying on development scripts is not a good idea, having a mechanism that automatically builds the difference is a MUST.

Further, this is basically the only reliable way to rollback and recover from unstable developments. In fact, if you have an engine able to automatically build differences, it is possible to build a rollback on the fly and repeatedly.

External links:

This article is a creative one and the concept is good to enhance our knowledge. Waiting for more updates.

RispondiEliminaDevops Online Course

Best Devops Online Training

Learn Devops Online